Introduction

You made it through the outage. Systems are back online, customers are happy and your team can finally breathe. But here’s the thing nobody wants to answer: could you handle the same incident better tomorrow?

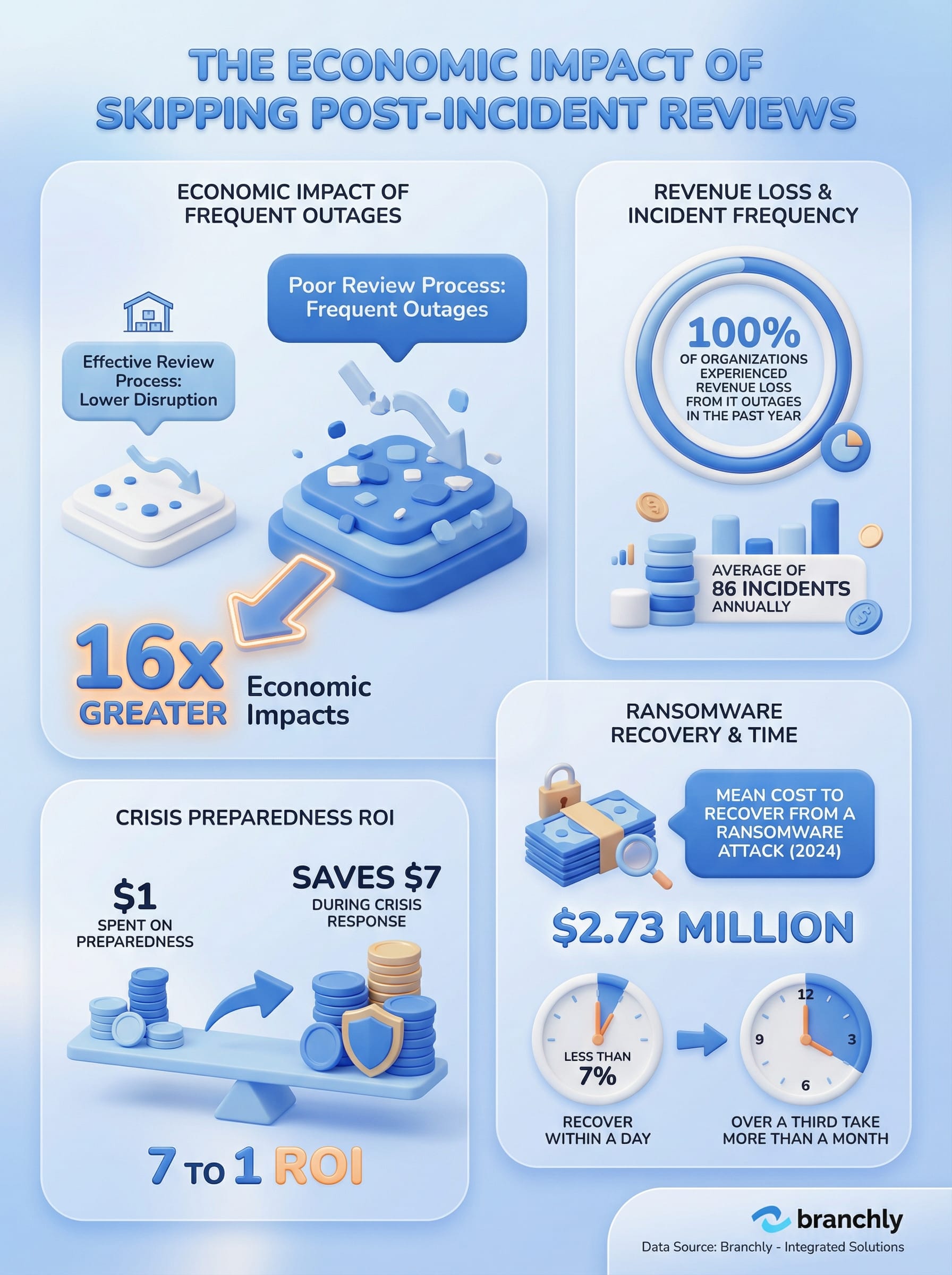

The difference between companies that improve and those that repeat the same mistakes comes down to one thing: post-incident reviews. A 2025 survey found that 100% of organizations had revenue loss from IT outages in the past year, with an average of 86 incidents. Companies with frequent outages face economic impacts 16 times higher. Skip the review, skip the chance to break that cycle.

Why Most Post-Incident Reviews Fail

Too many reviews become blame sessions or box-checking exercises. Someone writes a report, it goes into a folder, and the same problems resurface three months later. Thing is, teams actually care. They just approach reviews as paperwork instead of learning opportunities.

Companies that skip proper reviews pay for it. Human error causes downtime in over two-thirds of organizations, and those without solid incident response plans are 4.4 times less resilient. When you don’t analyze what happened, you leave the same problems in place.

The Cost of Skipping Reviews

The mean cost to recover from a ransomware attack hit $2.73 million in 2024, up nearly $1 million from the year before. Less than 7% of companies can recover within a day, while over a third take more than a month.

So, the review needs to happen while details are fresh but after the immediate pressure’s gone. Wait too long and people forget key details. Rush it and you miss important insights because everyone’s still in crisis mode.

What a Proper Review Actually Covers

Start with the facts. Build a timeline from detection to resolution. What happened, when it happened, who was involved, what systems got hit. This isn’t about judging anyone, it’s just documentation.

Then dig into root causes, go past the immediate technical failure to find what’s really going on with processes or configurations. Use methods like the 5 Whys or Fishbone Diagrams. You’re trying to understand not just what broke but why it was allowed to break in the first place, was there a process gap, a training issue, a configuration nobody caught?

Separate Problem Management

Some organizations handle root cause analysis separately through problem managemant teams. The post-incident review becomes the starting point, but detailed technical analysis continues afterward with specialists.

Next up is to put your response to the test - how swiftly did teams jump into action on hearing the bad news? Was the word clear to all about what was going on? Were escalation procedures triggered as they should have been? Were the right people clued in at the right time? The answers to these questions will be a pretty good gauge of how well your plans stood up to reality.

Get all the stakeholders involved, and in honest detail, get to the bottom of this one. Tech teams, upper management & the business units are all going to see things from a different angle, and their takes will add up to the whole picture. And don't forget to tap into the insights from the frontline staff who've been dealing with customers, the people who will let you know what the executives are missing.

Turning Findings Into Something That Actually Changes

Doing analysis without any actual follow-through is like nothing at all. Every finding should go on to a clear recommendation, no matter if its a tweak to a process, some new tech, or more training on the side. Be as specific as you possibly can about what needs to get done, and by whom.

So assign each action-item to someone with a clear deadline, and then make sure to keep tabs on where things are at. Unfortunately, that's where a lot of companies start to stumble - they put the effort into great reports, but nobody owns the work they're supposed to be doing.

Build a central repository of past problem- incidents & their solutions. When another problem shows up that you've seen before, teams can check out what worked last time around, get a quick fix in place, and stave off making the same old mistakes over & over again. Every dollar you spend on disaster prep ends up saving you $7 when the crisis actually hits.

The Blame-Free Rule

Create an environment where people can speak honestly without fear. The goal is learning, not punishment. When teams fear blame, they hide problems instead of fixing them.

(schedule those follow-up meetings to check that all the action items got wrapped up) verify that improvements aren't just on paper, that they actually work in a crunch. Update those incident response plans, business continuity plans, and disaster recovery plans - take what you learned and put it to use.

Putting your learning into Practice

Post-incident improvements are all well and good, but they're really only worth it if they hold up when it counts. Run some tabletop exercises once a year at the very least, to put those shiny new procedures to the test. Simulating these kinds of situations gives teams a chance to make decisions without any real-world consequences.

(choose scenarios based on real incidents you've actually handled, then mix things up to add some extra realism - what if the very same outage happened at the absolute worse possible moment ? or what if your go-to person was out of commission for some reason ?) you'd be surprised how well these exercises show you where you're still coming up short, things that get glossed over in the course of normal operations.

Practice Stabilization

Only restore systems after verifying integrity and confirming backups are clean. Resume operations gradually to avoid new errors. Make sure your change management process is ready to handle fixes quickly.

Special attention should be paid to communication protocols - let's make sure those are up to speed. Update our guidelines based on whatever hard-won lessons we learn from this. Box-test the standard message templates for employees, customers, vendors and regulators, to make sure we're covering all our bases. Because if a crisis falls apart at the seams, we can end up losing 15% of our market share overnight, but with a bit of pre-emptive planning, we can actually cut our recovery time in half - 53% faster is a pretty big deal.

Pivoting Our Approach to Reviews

Make it a habit to do after-action reviews all the time - don't rely on having a spare moment. Set up the auto-schedulers so they fire off a review every time one of the big incidents pops up. Define what constitutes a big incident so we all know when an extra review's needed - teams need to be on the same page.

Do it the same way every time, so we can get some real insight from looking at our reviews over time. Use one template for all reviews, rather than trying to wing it each time - that way we can identify the trends that are really important. Are certain systems just consistently letting us down? Are there specific teams that are always struggling with the same problems? The template makes it all visible.

Complete Post-Incident Review Framework

Five key steps that turn incidents into improvements

Document every step of the way - time stamp each event, decision, approval and action taken during the incident. This audit trail does a couple of important things : it helps you make sense of what went down and its second big benefit is that it helps show regulators you've been playing by the rules. The massive cost of a data breach in the US is currently a whopping $9.36 million - properly documenting will help reduce your liability and also prove to others you've done your due diligence.

Then get the word out internally. When one branch or location has an incident - even if you have multiple - other locations can look at the outcome and take something away from it. This is particularly valuable for big companies with a lot of different sites as the same risks are present at every one of them.

The Real Value of Reviews

Post incident reviews can turn what could have been a disaster into a valuable learning experience. What might have felt like a crisis gets turned into a chance to improve procedures, strengthen your defenses and build some resilience. Companies that get this right - that do reviews properly - tend to reduce downtime, keep their customers a lot happier and overall see a decrease in operational risk.

Going through a review also helps build a mindset of continuous improvement. Teams see that the work they put into this leads to real change and that the company values that. You get the benefit of people not being afraid to talk about what happened, because in the end you dont get nailed for making mistakes. All of this adds up to make dealing with future incidents a whole lot easier.

Start small if you need to. Pick one recent incident and go through the process properly - make a timeline of what happened, find out what went wrong, see how you responded and create a list of what you need to do next. Track those things till they're done, then pick the next one. The more you do this the better at it you'll get and the more benefits you'll see over time.

Summary

Post-incident review - the difference between companies that can learn from their mistakes and those that just keep making the same ones. To make the most of it, you've got to get the process right. That means structuring it in a way that fosters learning, not finger-pointing. Start with a clear timeline of what went down, then get to the real meat - what went wrong and why. Check in on how you responded to the crisis and turn what you've learned into clear, actionable steps - not just vague ideas - with specific owners and deadlines attached. Then test those improvements through regular "what if" exercises. Make reviews a standard part of your routine after every big incident - and by big incident, we mean anything that shook the foundations. The aim here is progress, not perfection. Each review should leave you a tiny bit better equipped to handle the next crisis that comes your way.

Key Things to Remember

- ✓Organizations that skip post-incident reviews face economic impacts 16 times greater than those that learn from incidents.

- ✓Proper reviews need a clear timeline, root cause analysis, response evaluation, stakeholder feedback, and specific action items with owners.

- ✓Create a blame-free environment so teams share honest insights instead of hiding problems.

- ✓Track action items to completion and verify improvements through follow-up meetings and regular testing exercises.

- ✓For every dollar spent on crisis preparedness, organizations save seven dollars during actual crisis response.

How Branchly Can Help

Branchly's Intelligence Layer actually runs an automatic review of every response time, skipped step and bottleneck, every single time there's an incident or a drill. It keeps a super detailed log of every single action, every timestamp & every approval - that's what gives you a complete audit trail. And after each response, Branchly starts making some recommendations : maybe you should cut out steps that aren't working, or reorder how things are happening, or just tweak the timing in your playbooks. And this is where it gets really cool : all this just happens automatically, without anyone having to manually go through the numbers and figures. So your team is getting super-timely insights that they can actually act on, and your playbooks - they're constantly getting better because they're based on hard data of how they've really been performing.

Citations & References

- [1]Resilient by Design: Fundamentals of Business Continuity and Incident Response - YHB CPAs & Consultants yhbcpa.com View source ↗

- [2]

- [3]Comprehensive Guide to Business Continuity Management: Strategies & Best Practices USA protechtgroup.com View source ↗

- [4]

- [5]

- [6]The Most Critical Challenges in Incident Management You Need to Overcome - Expert Crisis Management and Disaster Preparedness | Early Alert earlyalert.com View source ↗

- [7]